Instagram will let you appeal post takedowns

Instagram isn’t just pretty pictures. It now also harbors bullying, misinformation and controversial self-expression content. So today Instagram is announcing a bevy of safety updates to protect users and give them more of a voice. Most significantly, Instagram will now let users appeal the company’s decision to take down one of their posts.

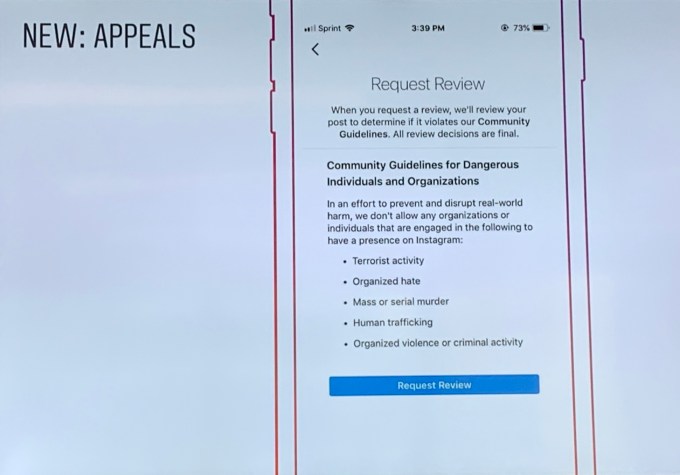

A new in-app interface, rolling out over the next few months starting today, will let users “get a second opinion on the post,” says Instagram’s head of policy, Karina Newton. A different Facebook moderator will review the post, restore its visibility if it was wrongly removed, and inform the user of the conclusion either way. Instagram has always let users appeal account suspensions, but now someone can appeal a takedown if their post was mistakenly removed for nudity when they weren’t nude, or for hate speech that was actually friendly joshing.

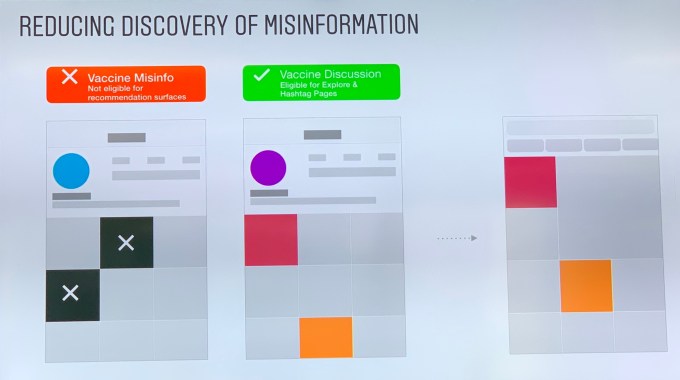

Blocking vaccine misinfo hashtags

On the misinformation front, Instagram will begin blocking vaccine-related hashtag pages when the content surfaced on a hashtag page features a large proportion of verifiably false content about vaccines. If there is some violating content, but under that threshold, Instagram will lock the hashtag into a “Top-only” mode, where Recent posts won’t show up, to decrease the visibility of problematic content. Instagram says that it will test this approach and expand it to other problematic content genres if it works. Instagram will also surface educational information via a pop-up to people who search for vaccine content, similar to what it has used in the past for self-harm and opioid content.

Instagram says that now that health agencies like the Centers for Disease Control and Prevention and the World Health Organization are confirming that VACCINES DO NOT CAUSE AUTISM, it’s comfortable declaring contradictory information verifiably false, so that content can be aggressively demoted on the platform.

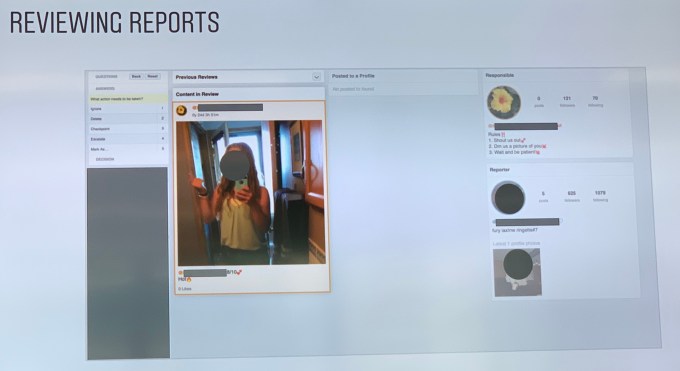

Instagram’s automated system scans and scores every post uploaded to the app, checking each one against classifiers of prohibited content and what it calls “text-matching banks.” These are collections of fingerprinted content Instagram has already banned: their text is indexed, and words are pulled out of imagery through optical character recognition, so Instagram can find posts with the same words later. It’s working on extending this technology to videos, and all the systems are being trained to spot obvious issues like threats, unwanted contact and insults, but also those causing intentional fear-of-missing-out, taunting, shaming and betrayals.
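To make the “text-matching bank” idea concrete, here is a minimal sketch of how such a lookup could work. It is not Instagram’s implementation: the class name `TextMatchingBank`, the normalization rules and the use of SHA-256 fingerprints are all assumptions for illustration, the OCR step is treated as already done (the extracted words arrive as a plain string), and a production system would presumably use fuzzier matching than the exact comparison shown here.

```python
import hashlib
import re


def normalize(text: str) -> str:
    """Lowercase the text, drop punctuation and collapse whitespace."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return " ".join(words)


def fingerprint(text: str) -> str:
    """Hash the normalized text so the bank never stores the raw words."""
    return hashlib.sha256(normalize(text).encode("utf-8")).hexdigest()


class TextMatchingBank:
    """Hypothetical bank of fingerprints taken from already-banned posts."""

    def __init__(self) -> None:
        self._fingerprints: set[str] = set()

    def add_banned(self, text: str) -> None:
        self._fingerprints.add(fingerprint(text))

    def matches(self, caption: str, ocr_text: str = "") -> bool:
        # ocr_text stands in for words pulled out of the image by OCR.
        candidates = [t for t in (caption, ocr_text) if t.strip()]
        return any(fingerprint(t) in self._fingerprints for t in candidates)


bank = TextMatchingBank()
bank.add_banned("Vaccines KILL!!")
print(bank.matches("vaccines   kill"))                # same words, different styling
print(bank.matches("", ocr_text="VACCINES kill."))    # words found inside an image
print(bank.matches("nice sunset"))                    # unrelated caption
```

Because only normalized text is hashed, changes in capitalization, spacing or punctuation do not hide a repost, while any change to the words themselves does, which is why exact fingerprints alone would be easy to evade.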

If the AI is confident a post violates policies, the post is taken down and counted as a strike against any hashtag it included. If too high a percentage of a hashtag’s content is violating, the hashtag will be blocked. If it has fewer strikes, it gets locked in Top-only mode. The change comes after stern criticism from CNN and others about how hashtag pages like #VaccinesKill still featured tons of dangerous misinformation as recently as yesterday.
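The strike rule described above can be sketched as a small decision function. Instagram has not published its thresholds, so `BLOCK_THRESHOLD` is a made-up value, and treating any nonzero number of strikes below it as grounds for Top-only mode is a literal reading of the description rather than a confirmed rule.

```python
from enum import Enum


class HashtagState(Enum):
    NORMAL = "normal"
    TOP_ONLY = "top_only"   # Recent posts hidden, only Top posts shown
    BLOCKED = "blocked"     # hashtag page unavailable


# Hypothetical value: the real threshold is not public.
BLOCK_THRESHOLD = 0.30


def hashtag_state(strikes: int, total_posts: int) -> HashtagState:
    """Classify a hashtag by the share of its posts that drew a strike."""
    if total_posts <= 0 or strikes <= 0:
        return HashtagState.NORMAL
    share = strikes / total_posts
    if share >= BLOCK_THRESHOLD:
        return HashtagState.BLOCKED
    # Some violating content, but under the blocking threshold.
    return HashtagState.TOP_ONLY


print(hashtag_state(strikes=40, total_posts=100))
print(hashtag_state(strikes=3, total_posts=100))
print(hashtag_state(strikes=0, total_posts=100))
```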

Tally-based suspensions

One other new change announced this week is that Instagram will no longer decide whether to suspend an account based on the percentage of its content that violates policies, but on a tally of total violations within a certain period of time. Otherwise, Newton says, “It would disproportionately benefit those that have a large amount of posts,” because even a large number of violations by a prolific poster would amount to a smaller percentage than a rare violation by someone who doesn’t post often. To prevent bad actors from gaming the system, Instagram won’t disclose the exact time frame or number of violations that trigger suspensions.
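A short worked example shows why the two rules treat prolific and occasional posters so differently. Every number here is invented, since Instagram is deliberately not disclosing its time frame or violation count: `WINDOW`, `MAX_VIOLATIONS` and `MAX_VIOLATION_SHARE` are placeholders, and both functions are hypothetical.

```python
from datetime import datetime, timedelta

# All three values are placeholders; the real ones are not public.
WINDOW = timedelta(days=30)
MAX_VIOLATIONS = 5
MAX_VIOLATION_SHARE = 0.05


def suspend_by_percentage(violations: list[datetime], total_posts: int) -> bool:
    """Old rule: suspend when too large a share of posts violated policy."""
    return total_posts > 0 and len(violations) / total_posts > MAX_VIOLATION_SHARE


def suspend_by_tally(violations: list[datetime], now: datetime) -> bool:
    """New rule: suspend on the raw count of violations inside the window."""
    recent = [t for t in violations if now - t <= WINDOW]
    return len(recent) >= MAX_VIOLATIONS


now = datetime(2019, 5, 10)
# Prolific account: 20 violations, one per day, out of 1,000 posts (2%).
heavy_poster = [now - timedelta(days=d) for d in range(20)]
# Occasional account: 1 violation out of 10 posts (10%).
rare_poster = [now - timedelta(days=3)]

print(suspend_by_percentage(heavy_poster, 1000), suspend_by_tally(heavy_poster, now))
print(suspend_by_percentage(rare_poster, 10), suspend_by_tally(rare_poster, now))
```

Under these made-up numbers the percentage rule suspends only the occasional poster with a single slip, while the tally rule suspends only the account with 20 recent violations, which is the imbalance Newton describes.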

Instagram recently announced at F8 several new tests on the safety front, including a “nudge” not to post a potentially hateful comment a user has typed, “away mode” for taking a break from Instagram without deleting your account and a way to “manage interactions” so you can ban people from taking certain actions like commenting on your content or DMing you without blocking them entirely.

The announcements come as Instagram has solidified its central place in youth culture. That means it has intense responsibility to protect its user base from bullying, hate speech, graphic content, drugs, misinformation and extremism. “We work really closely with subject matter experts, raise issues that might be playing out differently on Instagram than Facebook, and we identify gaps where we need to change how our policies are operationalized or our policies are changed,” says Newton.

Contributor: Social – TechCrunch

Reviewed by mimisabreena

on

Friday, May 10, 2019
