Instagram still doesn’t age-check kids. That must change.

Instagram dodges child safety laws. By not asking users their age at signup, it can feign ignorance about how old they are. That way, it avoids being held liable for $40,000 per violation of the Children's Online Privacy Protection Act (COPPA). The law bans online services from collecting personally identifiable information about kids under 13 without parental consent. Yet Instagram is surely stockpiling that sensitive info about underage users, shielded by the excuse that it doesn't know who's who.

But here, ignorance isn’t bliss. It’s dangerous. User growth at all costs is no longer acceptable.

It’s time for Instagram to step up and assume responsibility for protecting children, even if that means excluding them. Instagram needs to ask users’ age at signup, make every practical effort to verify that the birthdate they provide is accurate, and enforce COPPA by removing users it knows are under 13. If it wants to allow tweens on its app, it needs to build a safe, dedicated experience where the app doesn’t suck in COPPA-restricted personal info.

Minimum Viable Responsibility

Instagram is woefully behind its peers. Both Snapchat and TikTok require you to enter your age as soon as you start the signup process. This should really be the minimum regulatory standard, and lawmakers should close the loophole that lets services skirt compliance by simply not asking. If users register for an account, they should be required to enter an age of 13 or older.

Instagram’s parent company Facebook has asked for a birthdate during account registration since its earliest days. Sure, it adds one extra step to signing up and impedes its growth numbers by discouraging kids from getting hooked on the social network early. But it also benefits Facebook’s business by letting it accurately age-target ads.

Most importantly, at least Facebook is making a baseline effort to keep out underage users. Of course, as kids do when they want something, some are going to lie about their age and say they’re old enough. Ideally, Facebook would go further and try to verify the accuracy of a user’s age using other available data, and Instagram should too.

Both Facebook and Instagram currently have moderators lock the accounts of any users they stumble across and suspect are under 13. Users must upload government-issued proof of age to regain control. That policy only went into effect last year after the UK’s Channel 4 reported that a Facebook moderator was told to ignore seemingly underage users unless they explicitly declared they were too young or were reported for being under 13. An extreme approach would be to require this proof for all signups, though that could be expensive and slow, significantly hurt signup rates, and annoy of-age users.

Instagram is currently at the other end of the spectrum. Doing nothing around age-gating seems recklessly negligent. When asked why it doesn’t ask users’ ages, how it stops underage users from joining, and whether it’s in violation of COPPA, Instagram declined to comment. Instagram’s claim not to know users’ ages seems to directly contradict the fact that it offers marketers custom ad targeting by age, such as reaching only those who are 13.

Instagram Prototypes Age Checks

Luckily, this could all change soon.

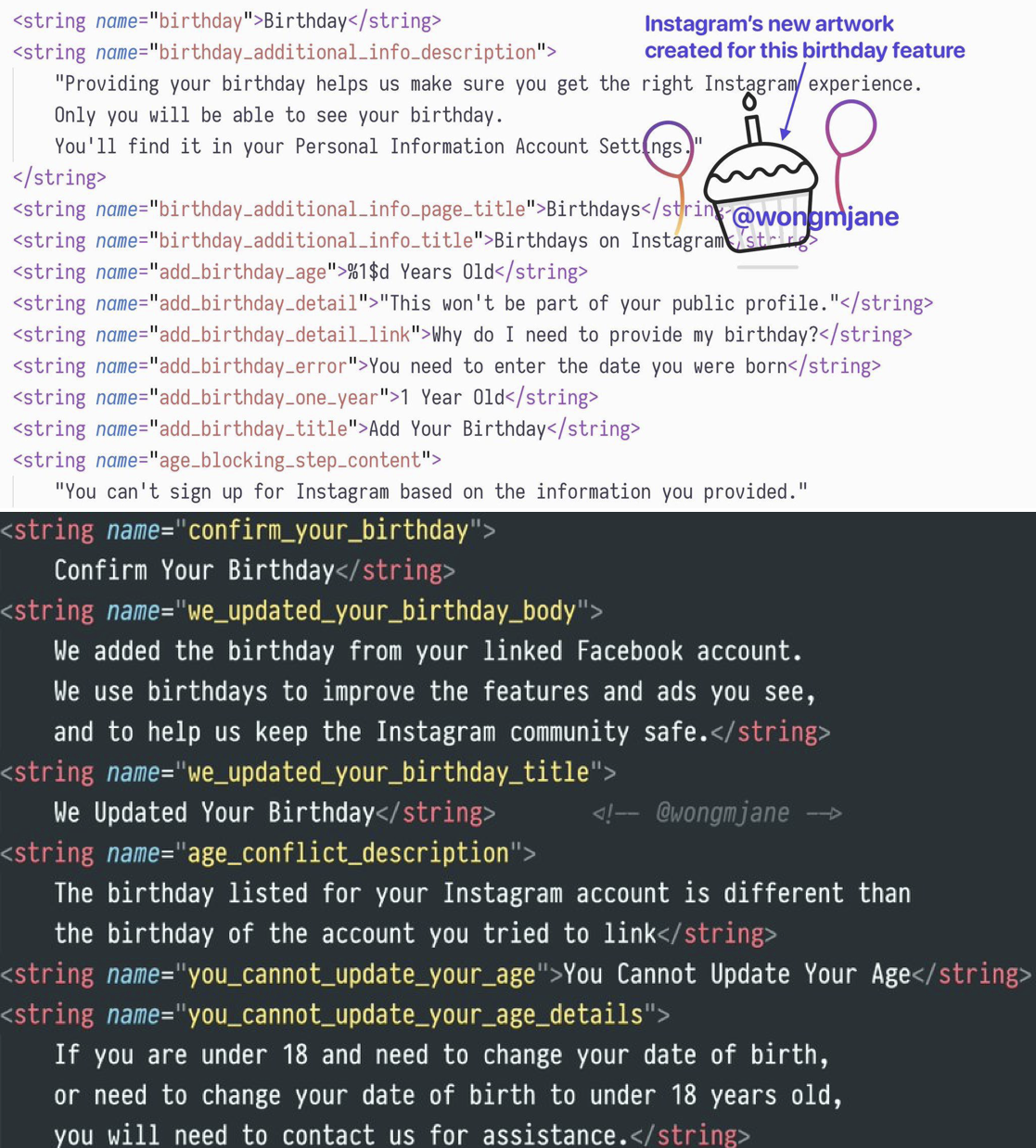

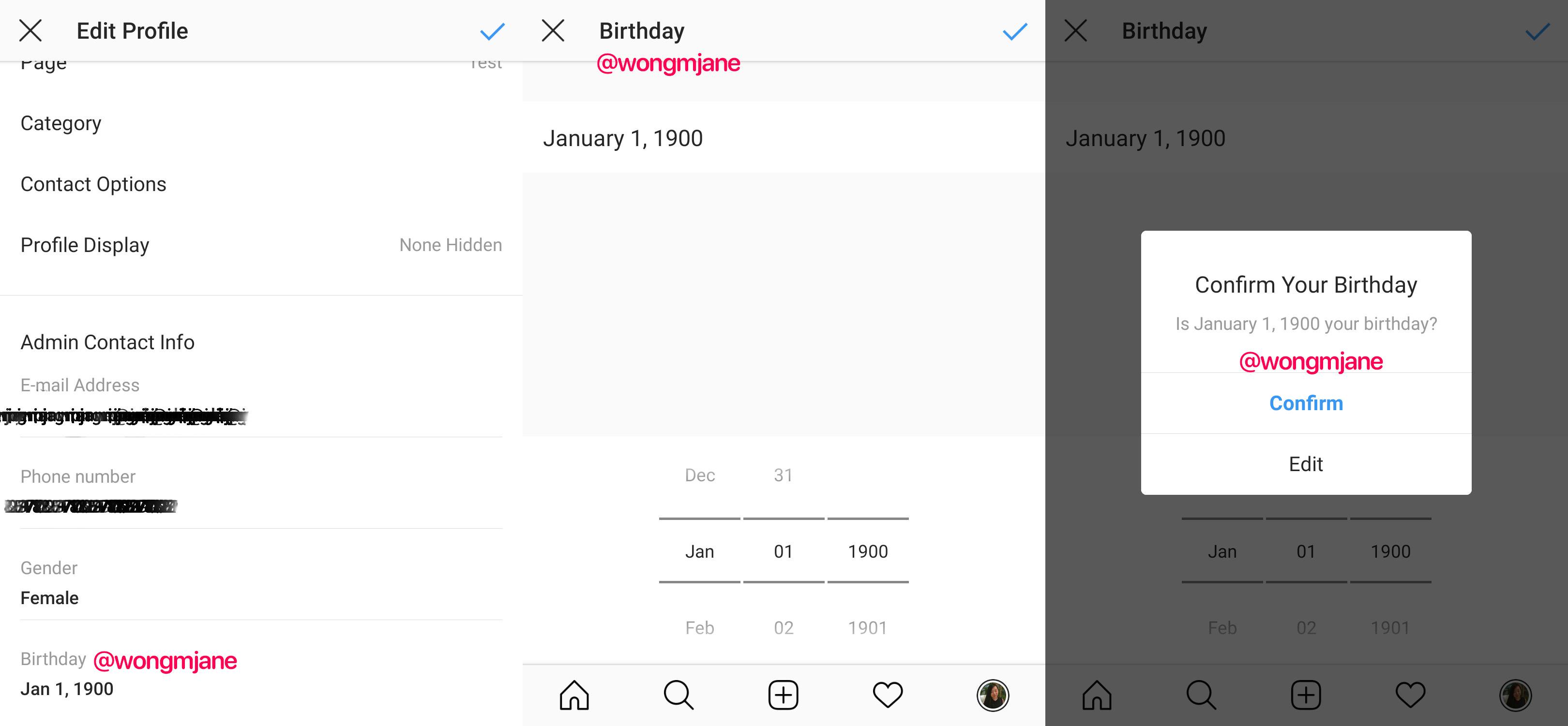

Mobile researcher and frequent TechCrunch tipster Jane Manchun Wong has spotted code inside Instagram’s Android app showing that it’s prototyping an age-gating feature that rejects users under 13. It’s also tinkering with requiring your Instagram and Facebook birthdates to match. Instagram gave me a “no comment” when I asked whether these features would officially roll out to everyone.

Code in the app explains that “Providing your birthday helps us make sure you get the right Instagram experience. Only you will be able to see your birthday.” Beyond just deciding who to let in, Instagram could use this info to make sure users under 18 aren’t messaging with adult strangers, that users under 21 aren’t seeing ads for alcohol brands, and that potentially explicit content isn’t shown to minors.
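To make those uses concrete, here is a minimal, hypothetical sketch of how a self-reported birthday could drive each check. The function names and the dictionary of gates are illustrative assumptions, not Instagram’s code; the cutoffs are the ones named above (13 for COPPA, 18 for minors, 21 for alcohol ads).

```python
from datetime import date

# Hypothetical cutoffs drawn from the checks described above (not Instagram's code).
COPPA_MIN_AGE = 13   # COPPA restricts collecting personal info from under-13s
ADULT_AGE = 18       # assumed cutoff for messaging adult strangers and explicit content
ALCOHOL_AD_AGE = 21  # assumed cutoff for alcohol-brand ads (US drinking age)


def age_on(birthdate: date, today: date) -> int:
    """Whole years elapsed between birthdate and today."""
    # Subtract one if this year's birthday hasn't happened yet.
    had_birthday = (today.month, today.day) >= (birthdate.month, birthdate.day)
    return today.year - birthdate.year - (0 if had_birthday else 1)


def experience_for(birthdate: date, today: date) -> dict:
    """Map a self-reported birthdate to the age gates discussed in the article."""
    age = age_on(birthdate, today)
    return {
        "can_sign_up": age >= COPPA_MIN_AGE,
        "can_message_adult_strangers": age >= ADULT_AGE,
        "can_see_explicit_content": age >= ADULT_AGE,
        "can_see_alcohol_ads": age >= ALCOHOL_AD_AGE,
    }


# A user born June 1, 2008, checked on this article's date, is 11 and gets turned away.
print(experience_for(date(2008, 6, 1), date(2019, 12, 4))["can_sign_up"])
```

A single stored birthdate is enough to answer every one of these questions, which is why asking for it at signup matters far more than any one downstream filter.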

Instagram’s inability to do any of this clashes with the big talk from Instagram and Facebook this year about their commitment to safety. Instagram has worked to improve its approach to bullying, drug sales, self-harm, and election interference, yet it has said not a word about age gating.

Meanwhile, underage users promote themselves on pages for hashtags like #12YearOld where it’s easy to find users who declare they’re that age right in their profile bio. It took me about 5 minutes to find creepy “You’re cute” comments from older men on seemingly underage girls’ photos. Clearly Instagram hasn’t been trying very hard to stop them from playing with the app.

Illegal Growth

I brought up the same unsettling situations on Musically, now known as TikTok, to its CEO Alex Zhu on stage at TechCrunch Disrupt in 2016. I grilled Zhu about letting 10-year-olds flaunt their bodies on his app. He tried to claim parents run all of these kids’ accounts, and got frustrated as we dug deeper into Musically’s failures here.

Thankfully, TikTok was eventually fined $5.7 million this year for violating COPPA and forced to change its ways. As part of its response, TikTok started showing an age gate to both new and existing users, removed all videos of users under 13, and restricted those users to a special TikTok Kids experience where they can’t post videos, comment, or provide any COPPA-restricted personal info.

If even a Chinese social media app, one that Facebook’s CEO has warned threatens free speech through censorship, is doing a better job of protecting kids than Instagram, something’s gotta give. Instagram could follow suit, building a special section of its apps just for kids where they’re quarantined from conversing with older users who might prey on them.

Perhaps Facebook and Instagram’s hands-off approach stems from the fact that CEO Mark Zuckerberg doesn’t think the ban on under-13-year-olds should exist. Back in 2011, he said “That will be a fight we take on at some point . . . My philosophy is that for education you need to start at a really, really young age.” He’s put that into practice with Messenger Kids, which lets 6- to 12-year-olds chat with their friends if their parents approve.

The Facebook family of apps’ ad-driven business model and earnings depend on constant user growth that stringent age gating could inhibit. It surely doesn’t want to admit to parents that it has let kids slip into Instagram, to advertisers that they were paying to reach children too young to buy anything, or to Wall Street that it might not have the 2.8 billion legal users across its apps that it claims.

But given Facebook and Instagram’s privacy scandals, addictive qualities, and impact on democracy, it seems like proper age-gating should be a priority as well as the subject of more regulatory scrutiny and public concern. Society has woken up to the harms of social media, yet Instagram erects no guards to keep kids from experiencing those ills for themselves. Until it makes an honest effort to stop kids from joining, the rest of Instagram’s safety initiatives ring hollow.

Contributor: Social – TechCrunch

Reviewed by mimisabreena on Wednesday, December 04, 2019

